The Case for Chaos: Thinking About Failure Holistically

This is a transcript of a talk which was presented by Gremlin Engineer Pat Higgins at SLC DevOpsDays in May 2018. The slides are also available. You can find Pat on Twitter as @higgycodes tweeting about holistic failure injection, React, and all things Australian.

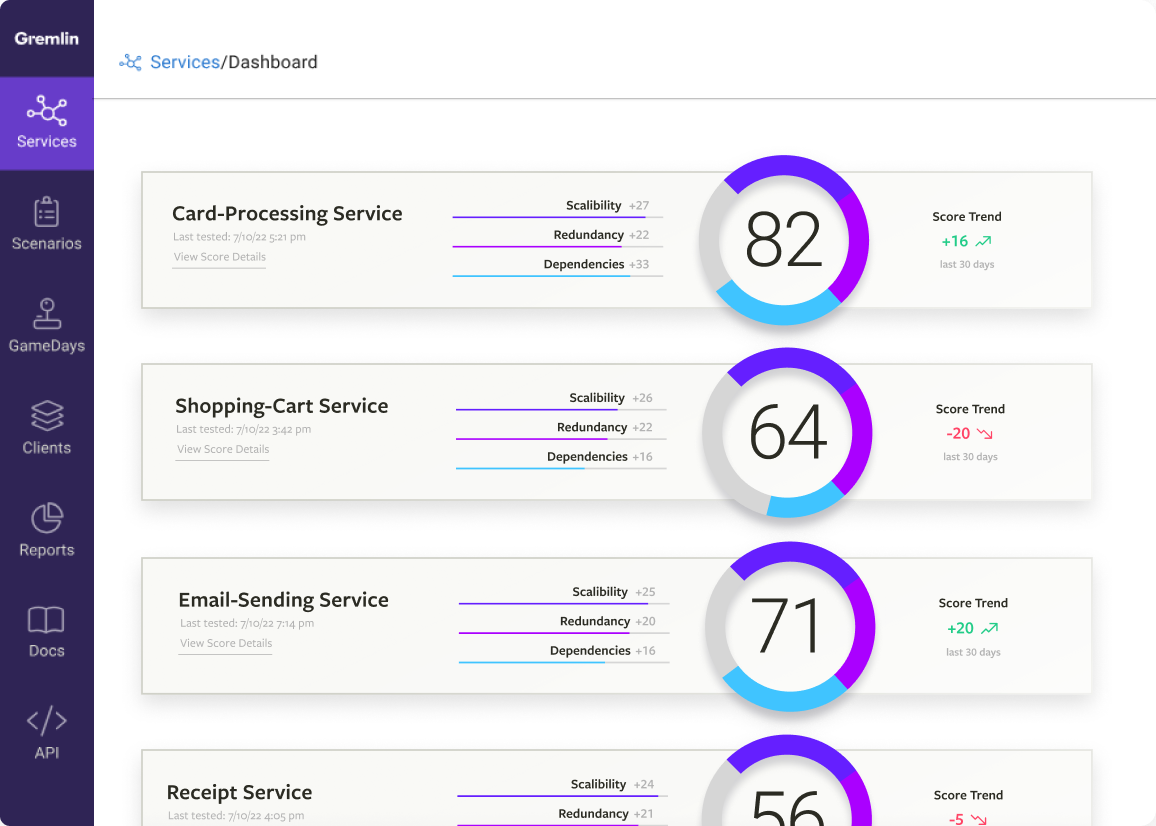

Can you see what’s wrong with this UI?

System degradation can have catastrophic implications for the look and feel of a UI. It can also go totally unnoticed if failure is proactively mitigated.

What is Chaos Engineering?

Thoughtful, planned experiments to reveal weaknesses in your system. Like a vaccine, these experiments inject enough harm into your system to make it more resilient, but not enough to make it ill (Check out the history of Chaos Engineering).

Chaos is a Practice

Like learning a musical instrument, learning a language, or going to the gym, Chaos Engineering is an iterative process. It doesn’t help to engage in Chaos Engineering as a singular event; constant practice is required to see results.

Why Engineer Chaos?

There are many reasons, depending on your role:

- Business case: Avoid the costs of downtime.

- On-call case: No one likes a 3am wake-up call.

- Engineering case: Fewer outages means more time to innovate.

Downtime is Costly

The costs of downtime are multifaceted. The most evident cost of downtime is the revenue lost because customers can’t access your product or service. Another, less quantifiable cost is damaged user trust.

And if downtime is chronic, it can drain engineering productivity and contribute to burnout. Internal services might be affected, and the process of remediating failure outside business hours can have a psychological impact on the engineers involved.

Prerequisites for Chaos

It’s important to think about building organizational structures around remediating the failure that is being proactively introduced through Chaos Engineering. Developing a High Severity (SEV) Incident Management Program can formalize the remediation process for an organization and help articulate a workflow that will be clear and consistent for experienced on-call staff and new hires.

Additionally, it's important to note that Chaos Engineering is largely an observational practice. It is essential to establish strong monitoring and paging practices that can be tested and validated through proactive failure injection.

There are also some procedural prerequisites that should accompany the organizational points mentioned above. Chaos Engineering follows a scientific process, so it's vital to establish a steady state hypothesis (control state) to compare experiment states against. Once a steady state is established, the team can articulate a hypothesis for how your system will react under duress.
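As a rough sketch of that comparison, assume the steady state is captured as a couple of numeric metrics and the hypothesis is expressed as the amount of drift you are willing to tolerate during an attack. The metric names, values, and thresholds below are hypothetical and are not taken from any Gremlin tooling.

```typescript
// Hypothetical metric snapshot; the field names and units are illustrative assumptions.
interface Metrics {
  p99LatencyMs: number;
  errorRate: number; // fraction of failed requests, between 0 and 1
}

// The hypothesis: under attack, each metric drifts no further than this from steady state.
interface Hypothesis {
  maxLatencyIncreaseMs: number;
  maxErrorRateIncrease: number;
}

// Returns the names of the metrics that broke the hypothesis (empty if it held).
function violations(steady: Metrics, observed: Metrics, h: Hypothesis): string[] {
  const broken: string[] = [];
  if (observed.p99LatencyMs - steady.p99LatencyMs > h.maxLatencyIncreaseMs) {
    broken.push("p99LatencyMs");
  }
  if (observed.errorRate - steady.errorRate > h.maxErrorRateIncrease) {
    broken.push("errorRate");
  }
  return broken;
}

// Made-up numbers for illustration only.
const steadyState: Metrics = { p99LatencyMs: 180, errorRate: 0.001 };
const duringAttack: Metrics = { p99LatencyMs: 450, errorRate: 0.002 };
const hypothesis: Hypothesis = { maxLatencyIncreaseMs: 100, maxErrorRateIncrease: 0.01 };

// Latency rose by 270 ms against a 100 ms allowance, so only that metric is reported.
console.log(violations(steadyState, duringAttack, hypothesis));
```

In practice the observed values would come from your monitoring system. The point is only that the hypothesis is written down before the experiment runs, so the result is a clear pass or fail.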

Word of Warning

Chaos Engineering is not a destructive practice. It is largely concerned with exploration and experimentation, not lighting your house on fire.

Chaos Mitigation is Multifaceted

The improvements garnered from Chaos Engineering are both technical and organizational. On the technical side, your systems build resilience as weaknesses are discovered and remediated through proactive failure injection. On the organizational side, teams become well practiced at identifying and remediating weaknesses in their systems.

What is a GameDay?

Ho Ming Li defines a GameDay as “dedicated time for teams to collaboratively focus on using Chaos Engineering practices to reveal weaknesses in [their] systems”. It's evident from this definition that GameDays are primarily organizational in nature.

Who Should Participate?

Within reason, everybody should participate in GameDays.

Why Everybody?

Everybody benefits from observing failure. This encourages those in positions with less of an SRE or DevOps focus to think critically about system architecture. In developing a deeper understanding, engineers, managers, and designers may be encouraged to collaborate more comprehensively when dealing with failure mitigation.

Additionally, you may find that “Chaos Engineering Captains” in your organization exist in the places you might least expect. Those unexpected champions, with a diverse range of perspectives, might offer fresh ideas on how to tackle some aspects of product failure.

Be Prepared

The more people take part in your GameDays, the more well-defined your process needs to be. At a minimum, you must define the target of and parameters for the attacks you plan to run. You should also whiteboard the system architecture in advance so everyone knows the moving parts and how requests and data flow through them. Localize your attacks and start small. The smaller your blast radius, the easier it will be to identify and fix any problems you uncover.
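One way to make the target and parameters explicit is to write each attack down as data and review it before the GameDay. The sketch below is an assumption about how that could look: the `AttackPlan` shape, the attack kinds, and the default limits (10% of hosts, 300 seconds) are hypothetical and do not correspond to a Gremlin API.

```typescript
// Hypothetical shape for a GameDay attack plan; not a Gremlin API.
type AttackKind = "latency" | "packet-loss" | "shutdown";

interface AttackPlan {
  kind: AttackKind;
  target: string;        // e.g. the name of a service in staging
  hostsAffected: number; // how many hosts the attack touches
  totalHosts: number;    // how many hosts run the target
  durationSeconds: number;
}

// Returns the reasons a plan is not ready to run; an empty list means it passed these checks.
// The default limits are arbitrary starting points, chosen here only for illustration.
function reviewPlan(plan: AttackPlan, maxBlastRadius = 0.1, maxDurationSeconds = 300): string[] {
  const problems: string[] = [];
  if (plan.target.trim() === "") problems.push("no target defined");
  if (plan.totalHosts <= 0 || plan.hostsAffected <= 0) {
    problems.push("host counts must be positive");
  } else if (plan.hostsAffected / plan.totalHosts > maxBlastRadius) {
    problems.push("blast radius too large");
  }
  if (plan.durationSeconds > maxDurationSeconds) problems.push("duration too long");
  return problems;
}

const smallPlan: AttackPlan = {
  kind: "latency",
  target: "staging-api",
  hostsAffected: 1,
  totalHosts: 20,
  durationSeconds: 60,
};
const widePlan: AttackPlan = { ...smallPlan, hostsAffected: 10 };

console.log(reviewPlan(smallPlan)); // no problems: 1 of 20 hosts is within the 10% limit
console.log(reviewPlan(widePlan));  // reports "blast radius too large": 10 of 20 hosts is 50%
```

Starting with a plan like `smallPlan` and widening it only after the earlier run behaved as expected is one way to put "start small" into practice.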

My First GameDay

In my first few months at Gremlin, the team decided to start holding “Failure Fridays”, where we would drop everything and attack our own staging environment. The goal wasn’t just to test our systems’ resilience, but to trial new product features on ourselves to make sure they worked as expected.

At that time I had been working very hard to keep a high velocity in order to impress my new colleagues, so it was a shock to see all my new UI features buckle as targeted failure was introduced into the system. The user experience was not up to my expectations, and this led me to think deeply about what failure mitigation strategies could be employed in the frontend.

As I began to identify potential remedies for the problems I identified, I threw tech debt tickets into our tracker, and the UI team was able to gradually improve the resiliency of the Gremlin UI.

Holistic Failure Mitigation

Chaos Engineering and UI

UI engineers tend to be relatively familiar with the concept of graceful degradation, but it is usually associated with browser support and bandwidth constraints. Comparatively little attention is paid to degradation caused by service failure.

The information displayed in the UI could be broken into two groups:

- Critical paths: the information or functionality that is fundamental to the user’s motives (e.g., the feed shown as a Twitter user comes to the site looking for the latest news or trends).

- Auxiliary paths: information that supplements the critical path (e.g., the left hand side of a Twitter user’s profile showing the number of tweets, followers and followed). If the failure of an auxiliary path is successfully mitigated, there is a good chance that the user may not even notice or care about any prospective failure.

An example of an auxiliary path might be the card on the left hand side of a user’s Twitter profile showing the number of tweets, followers, and followed, whereas the critical path might be the Twitter feed itself, as a user comes to the site looking for the latest news or trends.

Healthy State for Profile Card

Unhealthy Failure State for Profile Card

Did you notice the missing information?
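A card that degrades this quietly can be sketched as a render function that treats the counts as optional. This is a simplified, framework-free illustration, not how Twitter or the Gremlin UI is implemented; `ProfileStats` and `renderProfileCard` are hypothetical names, and the card is rendered as plain text to keep the example self-contained.

```typescript
// Counts supplied by a (hypothetical) stats service. They are auxiliary information.
interface ProfileStats {
  tweets: number;
  following: number;
  followers: number;
}

// `stats` is null when the stats service failed outright, or partial when only
// some counts could be loaded. The name and handle are always rendered.
function renderProfileCard(name: string, handle: string, stats: Partial<ProfileStats> | null): string {
  const lines: string[] = [name, `@${handle}`];
  const labels: [keyof ProfileStats, string][] = [
    ["tweets", "Tweets"],
    ["following", "Following"],
    ["followers", "Followers"],
  ];
  for (const [key, label] of labels) {
    const value = stats?.[key];
    // Omit a missing count entirely rather than showing an error, a spinner, or "undefined".
    if (value !== undefined) lines.push(`${label}: ${value}`);
  }
  return lines.join("\n");
}

// Healthy state: all three counts are shown.
console.log(renderProfileCard("Example User", "example", { tweets: 120, following: 80, followers: 300 }));

// Failure state: the card still renders, just without the counts.
console.log(renderProfileCard("Example User", "example", null));
```

The design choice is that missing auxiliary data shrinks the card instead of breaking it, which is why a user might not notice the failure at all.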

Likewise, Netflix has a critical flow around displaying TV shows or movies to watch from the main menu, but the fact that this content is usually targeted to the user’s preferences is largely an auxiliary path. If the service providing personalized content fails, a range of generic content is displayed instead. In doing so, the system has mitigated the impact of the failure on the user.
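The same fallback idea can be sketched in a few lines. This is not Netflix's implementation; `loadRows`, `withTimeout`, and the one second default timeout are assumptions made for illustration. The personalized fetch is treated as an auxiliary path, and any error or slow response falls back to a generic list so the critical path still has something to show.

```typescript
interface Rows {
  titles: string[];
  personalized: boolean; // false when the generic fallback was used
}

// Rejects if `work` has not settled within `ms` milliseconds.
function withTimeout<T>(work: Promise<T>, ms: number): Promise<T> {
  return new Promise<T>((resolve, reject) => {
    const timer = setTimeout(() => reject(new Error(`timed out after ${ms}ms`)), ms);
    work.then(
      (value) => { clearTimeout(timer); resolve(value); },
      (err) => { clearTimeout(timer); reject(err); },
    );
  });
}

// `fetchPersonalized` stands in for a call to a hypothetical recommendation service.
async function loadRows(
  fetchPersonalized: () => Promise<string[]>,
  genericTitles: string[],
  timeoutMs = 1000,
): Promise<Rows> {
  try {
    const titles = await withTimeout(fetchPersonalized(), timeoutMs);
    return { titles, personalized: true };
  } catch {
    // The auxiliary path failed or was too slow: keep the critical path alive with generic content.
    return { titles: genericTitles, personalized: false };
  }
}

// Usage: a failing service still yields something to display.
loadRows(async () => { throw new Error("recommendations unavailable"); }, ["Popular now", "New releases"])
  .then((rows) => console.log(rows.personalized, rows.titles));
```

Returning the `personalized` flag alongside the titles lets the caller log or alert on degraded responses even though the user sees a normal-looking page.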

Within the UI ecosystem, there are few generic tools for reproducing catastrophic failures. Additionally, end-to-end testing is only useful if a UI engineer has a good understanding of the range of possible failure states that might confront a user.
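In the absence of a generic tool, one lightweight way to enumerate failure states in a test is to wrap the UI's data-fetching function so that selected requests fail on purpose. The wrapper below is a hypothetical sketch, not an existing library: `Fetcher`, `FaultRule`, `withInjectedFaults`, and the paths are all illustrative names.

```typescript
// A minimal stand-in for whatever function the UI uses to load data.
type Fetcher<T> = (path: string) => Promise<T>;

// Requests whose path starts with `pathPrefix` fail with `error`.
interface FaultRule {
  pathPrefix: string;
  error: string;
}

// Wraps a fetcher so that matching requests are rejected before reaching the real one.
function withInjectedFaults<T>(fetcher: Fetcher<T>, rules: FaultRule[]): Fetcher<T> {
  return async (path: string) => {
    for (const rule of rules) {
      if (path.indexOf(rule.pathPrefix) === 0) {
        throw new Error(rule.error);
      }
    }
    return fetcher(path);
  };
}

// Example: take each backing service down in turn and record what every request sees.
async function exploreFailureStates(): Promise<string[]> {
  const realFetch: Fetcher<string> = async (path) => `data for ${path}`;
  const paths = ["/feed", "/profile-stats"];
  const outcomes: string[] = [];
  for (const down of paths) {
    const chaotic = withInjectedFaults(realFetch, [{ pathPrefix: down, error: "injected outage" }]);
    for (const path of paths) {
      try {
        await chaotic(path);
        outcomes.push(`${down} down, ${path}: ok`);
      } catch {
        outcomes.push(`${down} down, ${path}: failed`);
      }
    }
  }
  return outcomes;
}

exploreFailureStates().then((outcomes) => console.log(outcomes.join("\n")));
```

In a real test the assertions would be about what the UI renders in each state. The loop simply makes sure every failure state is visited at least once.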

Failure-related tooling for UIs has often been company-specific (Remind used Chaos Experiments for their mobile UI), but this is changing. There are exciting open source opportunities for those who would like to delve into the question of how Chaos Engineering will pertain to UI in the future.

Chaos Engineering and Product

Anecdotally, product specs that map out how a product should react to failure are rare. There is an opportunity to make failure states a concern of product engineers. Product managers and UX experts can help map out best practices and guide everyone towards creating a resilient, failure-tolerant user experience.

Useful Resources On Your Journey

Thinking about failure holistically doesn’t happen overnight. It’s a long journey and you will improve each day that you take a step. Some days you will take a step forward, some days you will take a step back. Most importantly, always learn from your experiences.

I’ve collected a range of resources that have been helpful for me on my journey. Leave a comment if there is a resource that has helped you!

Dastergon’s GitHub repo of ‘Awesome Chaos Engineering’ materials is a fantastic collection of all the major open source tooling for Chaos Engineering, plus plenty of talks and guides.

Our Solutions Architect Ho Ming Li put together a great collection of templates for GameDays. This is especially useful if you are just getting started and looking to formalize the processes for your organization.

The Chaos community Slack is a fantastic way to dive into the community. It’s a really relaxed place for you to ask questions about Chaos Engineering and meet some of the thought leaders.

Lastly, Gremlin is hosting the first open, large-scale conference solely devoted to Chaos Engineering. It’s going to be held in San Francisco on the 28th of September 2018.

